Delivering reliable, real-time data pipelines without theoverhead

Introduction

Many organizations today are under pressure to shift from static, batch-based reporting to real-time analytics. But in practice, setting upstreaming pipelines that are reliable, maintainable, and production-grade can quickly become complex—and expensive to support.

Teams often find themselves debugging custom Spark jobs, building their own alerting and monitoring stacks, and struggling to enforce data quality at scale. The result? Business stakeholders are promised “real-time” data, but get unreliable or untrustworthy outputs.

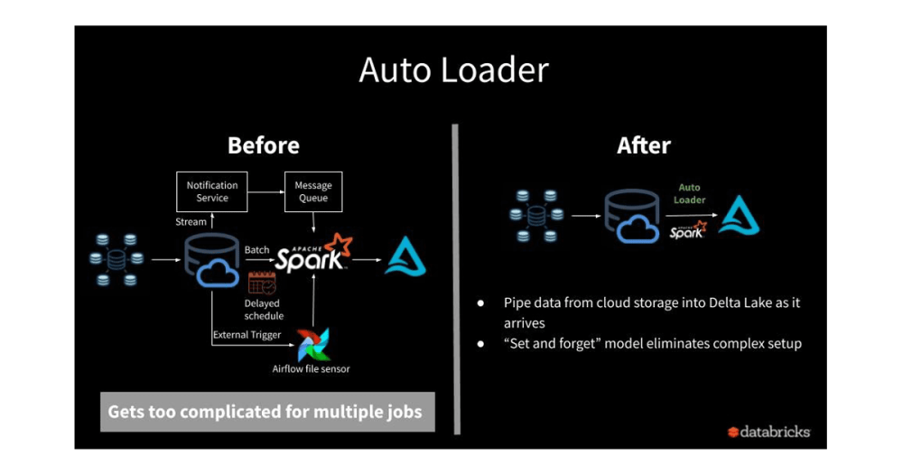

The Delta Live Tables (DLT) Streaming Framework removes these roadblocks. It provides a declarative, governed way to define ETL pipelines that run continuously or incrementally, with built-in monitoring, auto-scaling, and lineage. Our framework wraps this native capability into a deployable accelerator—getting clients up and running with resilient streaming pipelines in days, not weeks.

Why This Matters

Streaming analytics has traditionally been a high-friction area for data engineering teams:

- Building Spark Structured Streaming jobs from scratch creates technical debt.

- Schema enforcement and lineage tracking are difficult to implement consistently.

- Monitoring and alerting require external to ling and are often missing entirely.

- Data quality is hard to enforce across layers(e.g., bronze → silver → gold).

This leads to fragile, hard-to-debug pipelines and low confidence in “real-time” data products.

The DLT Streaming Framework simplifies this dramatically, turning real-time ETL into a managed, governed, and testable workflow—without the usual operational complexity.

How This Adds Value

With this accelerator, clients benefit from:

- Faster implementation: Declarative pipelines are easier to define and test, with less code and lower risk.

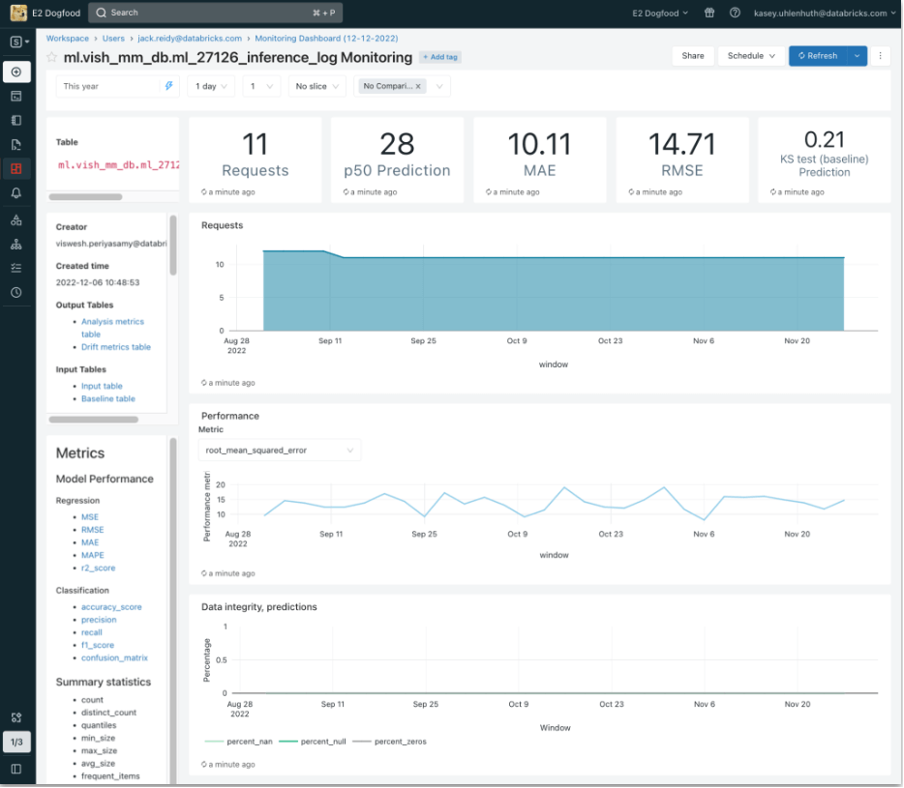

- Built-in observability: DLT tracks pipeline health, throughput, errors, and data quality issues automatically.

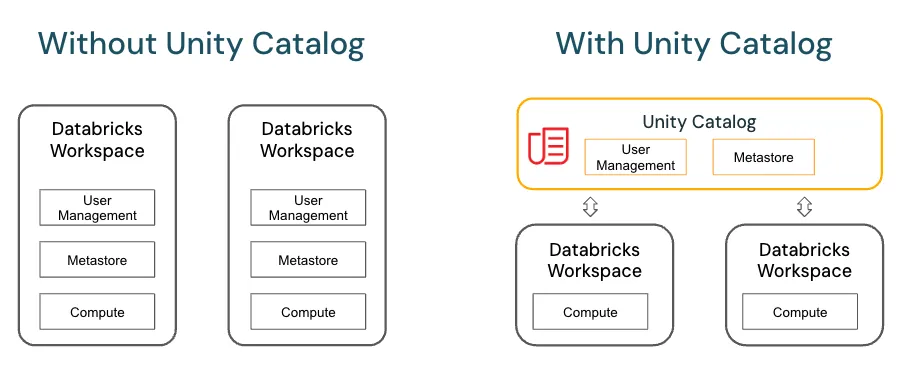

- Governance and trust: All transformations are tracked via lineage and are compatible with Unity Catalog.

- Lower total cost: Less custom code to maintain, fewer production incidents, and easier onboarding for new team members.

Most importantly, this framework shifts real-time data from a fragile experiment to a dependable capability.

Technical Summary

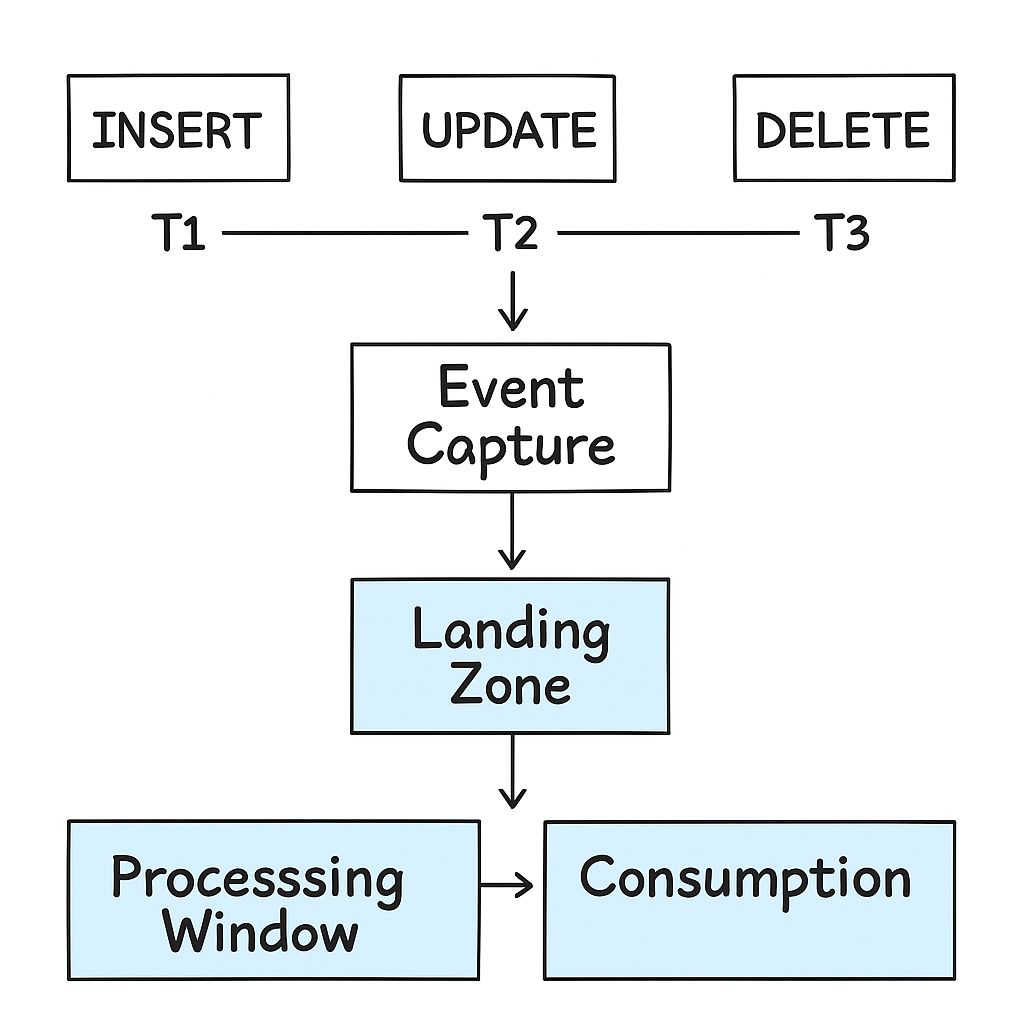

- Pipeline Layers:Bronze (raw), Silver (cleansed), Gold (curated)

- Execution Modes:Continuous or triggered (streaming or micro-batch)

- Core Tooling:Delta Live Tables, Delta Lake, Unity Catalog (optional)

- Built-in Features:

- Data expectations (validation rules)

- Auto-scalingclusters

- Audit logs and DAG visualization

- Delivery Assets:

- TemplateDLT pipeline in SQL or Python

- Config-driven metadata and layer definitions

- Job orchestration via Databricks Workflows or REST API