Data Lineage & Cataloging Accelerator

Governance and discovery across lakehouse assets

Introduction

Modern data platforms demand more than fast pipelines; they require transparency and governance. As companies scale out their lakehouse architecture, they quickly lose track of what data lives where, how it was produced, and who is responsible for it. Tables are created ad hoc. Pipelines mutate data without clear documentation. And when questions arise ("Can I trust this metric?", "Who owns this table?", "Why is this dataset out of date?"), the answers are hard to find.

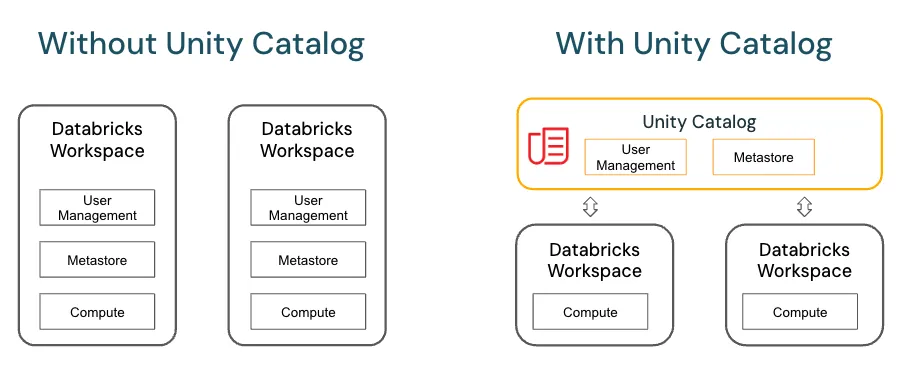

The Data Lineage & Cataloging Accelerator tackles this challenge by helping clients surface and manage metadata in a consistent, governed way. It automates lineage capture across ingestion and transformation pipelines, enables asset registration in Unity Catalog, and supports data discovery and ownership practices that scale. With it, clients lay the groundwork for responsible data use, regulatory compliance, and cross-team collaboration.
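One way to picture asset registration is as a small, reviewable spec that is rendered into Unity Catalog SQL. The sketch below is illustrative only: `AssetRegistration`, `registration_statements`, and the quoting helpers are hypothetical names, not part of any Databricks API. The statements it emits use the Databricks SQL forms `ALTER TABLE ... OWNER TO`, `COMMENT ON TABLE ... IS`, and `ALTER TABLE ... SET TAGS`; in a notebook, each string could be passed to `spark.sql(...)`. The code only generates SQL and does not connect to a workspace.

```python
from dataclasses import dataclass, field


def backtick(identifier: str) -> str:
    """Quote a single identifier or principal with backticks, escaping embedded backticks."""
    return "`" + identifier.replace("`", "``") + "`"


def quote_table(full_name: str) -> str:
    """Quote each part of a three-level name such as catalog.schema.table."""
    return ".".join(backtick(part) for part in full_name.split("."))


def quote_str(value: str) -> str:
    """Render a SQL string literal, escaping backslashes and single quotes."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


@dataclass
class AssetRegistration:
    """Hypothetical registration spec for one table (not a Databricks API)."""
    table: str                      # three-level name, e.g. "main.sales.orders"
    owner: str                      # user, group, or service principal
    description: str = ""
    tags: dict[str, str] = field(default_factory=dict)


def registration_statements(reg: AssetRegistration) -> list[str]:
    """Build SQL statements that set the owner, comment, and tags of one table."""
    tbl = quote_table(reg.table)
    stmts = [f"ALTER TABLE {tbl} OWNER TO {backtick(reg.owner)}"]
    if reg.description:
        stmts.append(f"COMMENT ON TABLE {tbl} IS {quote_str(reg.description)}")
    if reg.tags:
        # Sort tags so the generated SQL is deterministic and diff-friendly.
        pairs = ", ".join(
            f"{quote_str(k)} = {quote_str(v)}" for k, v in sorted(reg.tags.items())
        )
        stmts.append(f"ALTER TABLE {tbl} SET TAGS ({pairs})")
    return stmts


reg = AssetRegistration(
    table="main.sales.orders",
    owner="data-eng@example.com",
    description="Cleaned order events (silver layer)",
    tags={"layer": "silver", "domain": "sales"},
)
for stmt in registration_statements(reg):
    print(stmt)
```

Keeping specs like this in version control is one way to make ownership changes reviewable; the accelerator's own notebooks may approach registration differently.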

Why This Matters

Many clients have mature pipelines and petabytes of data, but they lack the transparency needed to understand what feeds their reports, which assets are authoritative, or how data flows across layers. The result is duplication, reliance on tribal knowledge, and friction in governance.

- Unknown data origin leads to duplication and misuse.

- Lack of ownership makes issue resolution slow.

- Regulatory audits become high-risk and manual.

- Data consumers don’t know which table to trust.

Lineage and metadata aren’t just governance checkboxes—they’re tools that empower producers and consumers to collaborate safely and confidently.

How This Adds Value

The accelerator simplifies the rollout of discoverability, governance, and auditability standards by unifying metadata capture and surfacing the results in a usable form. It helps both data teams and business stakeholders navigate the lakehouse with more trust.

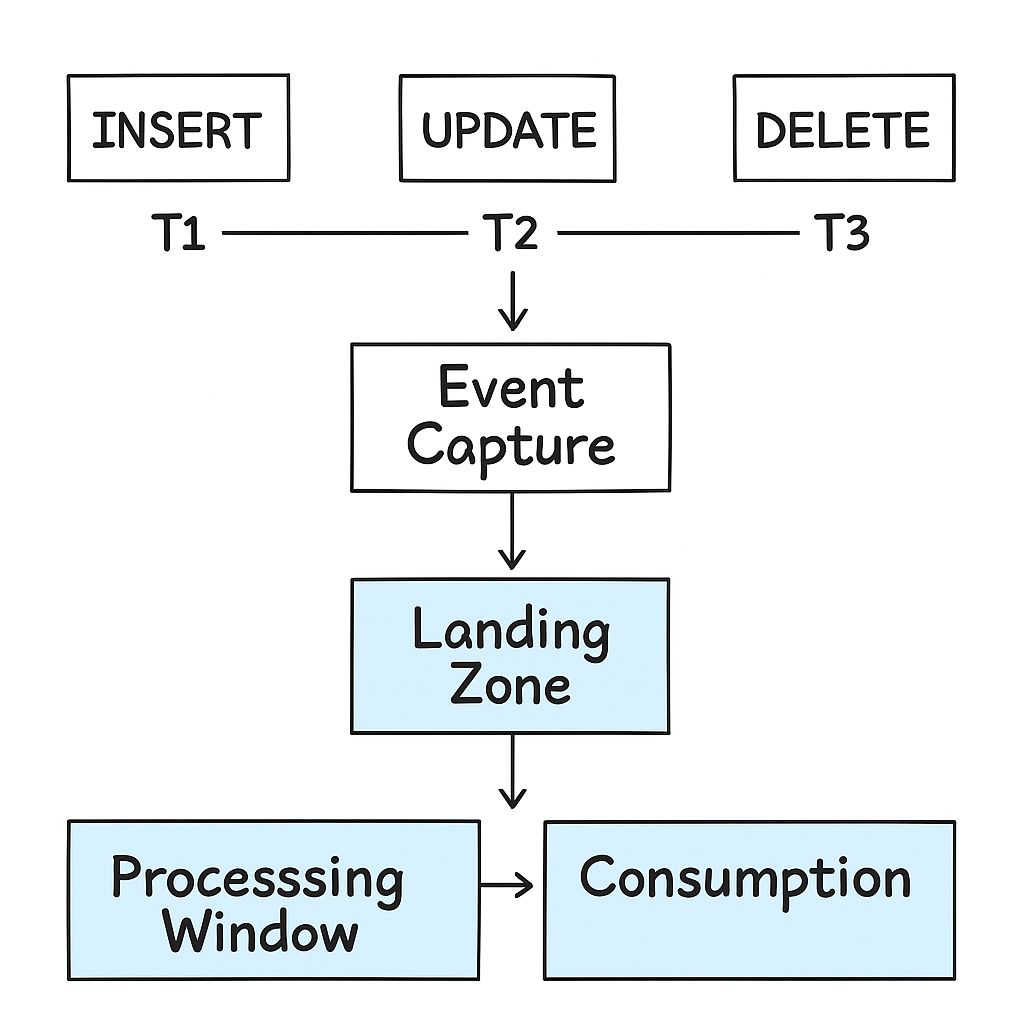

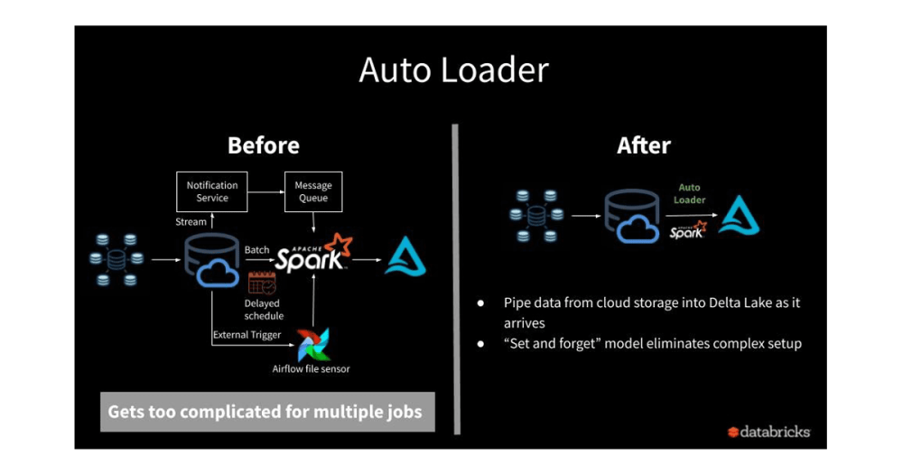

- Automates lineage capture across ingestion and transformation layers.

- Surfaces metadata like owner, freshness, and usage patterns.

- Boosts discoverability and responsible data use across departments.

- Lays the foundation for future governance, data contracts, and access policies.
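To make surfacing owner, freshness, and usage concrete, here is a minimal sketch of a check over already-collected metadata. It assumes each table has been reduced to a record with an owner, a last-modified timestamp, and a last-queried timestamp. The `table_owner` and `last_altered` field names echo columns of Unity Catalog's `information_schema.tables`; `last_queried`, the `audit` function, and the one-day and 90-day thresholds are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class TableMetadata:
    """One collected metadata row; names loosely follow information_schema.tables."""
    full_name: str
    table_owner: Optional[str]
    last_altered: datetime
    last_queried: Optional[datetime]  # hypothetical field, e.g. derived from query history


def audit(
    tables: list[TableMetadata],
    now: datetime,
    max_staleness: timedelta = timedelta(days=1),
    max_idle: timedelta = timedelta(days=90),
) -> dict[str, list[str]]:
    """Return {table: [issues]} for tables that lack an owner, are stale, or are unused."""
    findings: dict[str, list[str]] = {}
    for t in tables:
        issues = []
        if not t.table_owner:
            issues.append("no owner")
        if now - t.last_altered > max_staleness:
            issues.append("stale")
        if t.last_queried is None or now - t.last_queried > max_idle:
            issues.append("unused")
        if issues:
            findings[t.full_name] = issues
    return findings


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
tables = [
    TableMetadata("main.sales.orders", "data-eng", now - timedelta(hours=3), now - timedelta(hours=1)),
    TableMetadata("main.sales.orders_tmp", None, now - timedelta(days=40), None),
]
print(audit(tables, now))  # {'main.sales.orders_tmp': ['no owner', 'stale', 'unused']}
```

A single global staleness threshold is a simplification; expected refresh intervals usually differ per table, so a variant could read each table's threshold from a tag instead.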

Technical Summary

- Tools: Unity Catalog, DLT pipelines, Databricks system tables

- Metadata Types: Owner, created/modified date, last query access, tags

- Lineage: Table-to-table relationships, captured via DLT or manual annotations

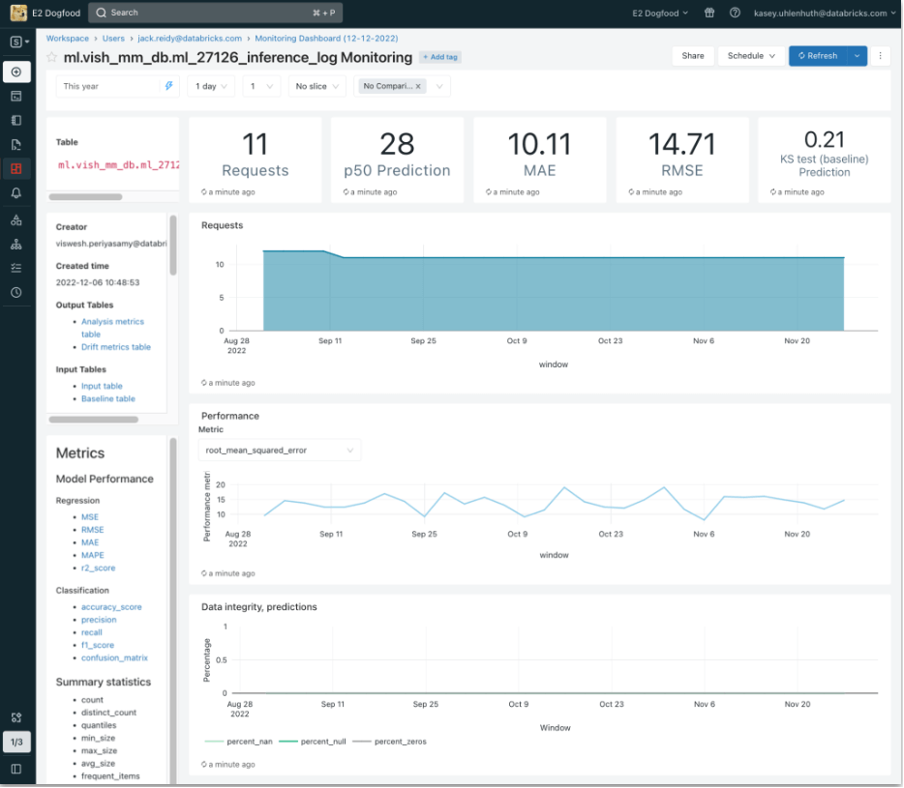

- Output: Searchable metadata layer or dashboards

- Assets: Metadata collector notebook, lineage visualizer, sample Unity Catalog policies
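Table-to-table lineage forms a directed graph, so answering "what feeds this report?" becomes a graph traversal. The sketch below assumes lineage edges arrive as `(source, target)` pairs of three-level table names. For example, they might be selected from the `source_table_full_name` and `target_table_full_name` columns of the `system.access.table_lineage` system table (verify the schema in your workspace) and unioned with manually annotated edges. `build_graph` and `upstream` are hypothetical helpers, not part of a Databricks API.

```python
from collections import defaultdict, deque
from typing import Iterable, Optional


def build_graph(edges: Iterable[tuple[Optional[str], Optional[str]]]) -> dict[str, set[str]]:
    """Map each target table to the set of tables it reads from directly."""
    parents: dict[str, set[str]] = defaultdict(set)
    for source, target in edges:
        # Skip rows where either side is not a table (e.g. a file-path source).
        if source and target:
            parents[target].add(source)
    return parents


def upstream(parents: dict[str, set[str]], table: str) -> set[str]:
    """Return every table that transitively feeds `table`, via breadth-first search."""
    seen: set[str] = set()
    queue = deque([table])
    while queue:
        current = queue.popleft()
        for src in parents.get(current, ()):
            if src not in seen:  # the seen-set also stops traversal on cycles
                seen.add(src)
                queue.append(src)
    return seen


edges = [
    ("main.raw.orders", "main.silver.orders"),
    ("main.raw.customers", "main.silver.customers"),
    ("main.silver.orders", "main.gold.revenue_daily"),
    ("main.silver.customers", "main.gold.revenue_daily"),
    (None, "main.raw.orders"),  # e.g. ingestion from a file path
]
print(sorted(upstream(build_graph(edges), "main.gold.revenue_daily")))
```

Reversing each edge gives the downstream view, which answers the impact question: which tables and dashboards are affected if this table changes.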