Cost Monitoring & Optimization Toolkit

Operational visibility into Databricks usage and spend.

Introduction

As organizations expand their Databricks usage, cost oversight becomes critical. While Databricks is elastic and scalable, it's also easy to overspend when jobs run inefficiently or clusters are left running. Different teams may own their own workspaces or jobs, yet few have clear insight into what their activities are costing. Finance teams struggle to allocate budgets accurately, and platform leads lack the tools to detect anomalies or prevent runaway compute usage.

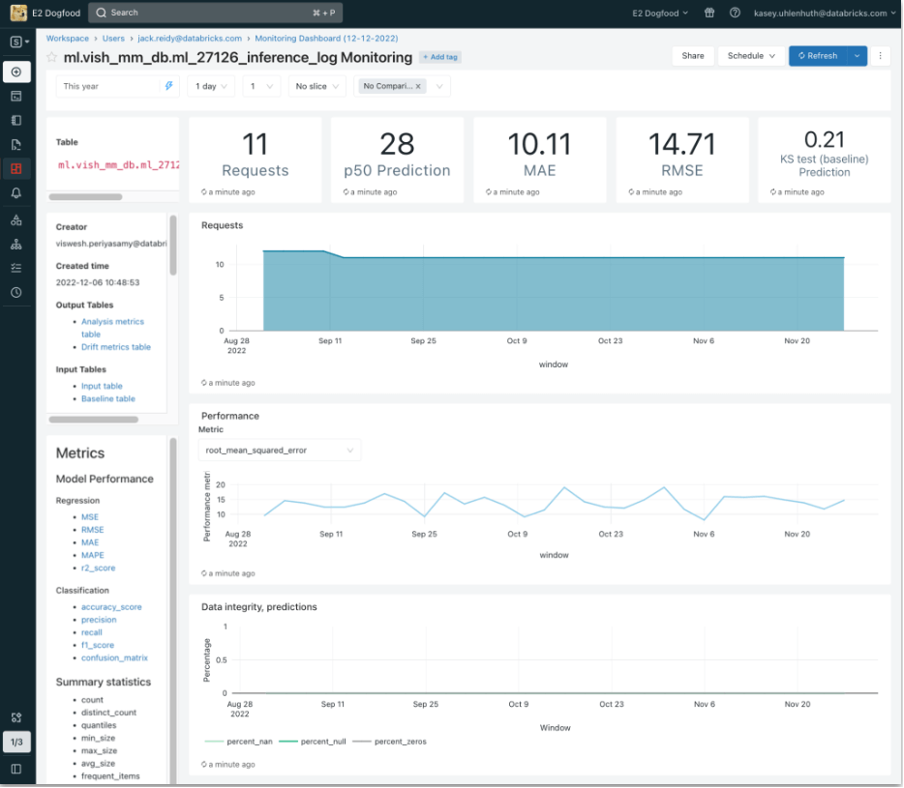

The Cost Monitoring & Optimization Toolkit addresses this challenge by providing a structured, automated way to ingest Databricks usage logs, transform them into human-readable cost and usage metrics, and expose these through intuitive dashboards. It enables organizations to monitor trends, flag inefficiencies, and break down costs by user, cluster, or job, all while remaining platform-native and scalable.
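The core of the transform step is attributing consumed DBUs to a price and a dimension such as user, job, or cluster. The sketch below illustrates that idea in plain Python, assuming usage records shaped loosely like rows of the Databricks system.billing.usage table (a SKU name, a DBU quantity, and who or what ran it). The field names, the UsageRecord type, and the flat PRICE_PER_DBU lookup are illustrative assumptions; real list prices vary by SKU, cloud, and time window (see system.billing.list_prices), and the prices below are made-up placeholders.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class UsageRecord:
    # Hypothetical shape, loosely modeled on system.billing.usage columns.
    sku_name: str
    usage_quantity: float             # DBUs consumed
    run_as: str                       # user or service principal
    job_id: Optional[str] = None      # None for non-job (e.g. interactive) usage
    cluster_id: Optional[str] = None


# Placeholder prices in USD per DBU; NOT real Databricks rates.
PRICE_PER_DBU = {
    "JOBS_COMPUTE": 0.15,
    "ALL_PURPOSE_COMPUTE": 0.55,
}


def cost_by(records, key):
    """Sum estimated cost (DBUs x price) grouped by one record attribute."""
    totals = defaultdict(float)
    for r in records:
        price = PRICE_PER_DBU.get(r.sku_name)
        if price is None:
            # Fail loudly rather than silently under-reporting spend.
            raise KeyError(f"no price for SKU {r.sku_name!r}")
        # Records with no value for the dimension get their own bucket.
        group = getattr(r, key) or "(none)"
        totals[group] += r.usage_quantity * price
    # Round only for display; keep full precision when reconciling invoices.
    return {k: round(v, 2) for k, v in sorted(totals.items())}
```

Grouping by "job_id" keeps non-job usage visible as an explicit "(none)" bucket instead of dropping it, so interactive spend still shows up in the breakdown.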

Why This Matters

Cloud costs have a habit of creeping up unnoticed—especially in shared or flexible platforms like Databricks. Teams may spin up oversized clusters, forget to terminate interactive notebooks, or run unoptimized queries that consume hours of unnecessary compute. Platform owners struggle to assign accountability or forecast costs accurately.

Without cost visibility:

- Teams over-provision clusters or run long, inefficient queries.

- Business units are billed inaccurately or not at all.

- Platform owners can’t enforce budget guardrails.

- Optimization efforts are reactive rather than proactive.

This toolkit creates a culture of accountability and enables data leaders to proactively manage usage patterns, detect inefficiencies, and align consumption with business value.

How This Adds Value

By centralizing usage data and surfacing insights in dashboards, the toolkit helps organizations make smarter operational decisions. Finance and data teams work from a shared view of spend, allowing more accurate budget planning and chargeback modeling.

- Enables cost accountability through per-user and per-job metrics.

- Supports chargeback and showback models for finance operations.

- Surfaces anomalies like unused clusters or failed job retries.

- Helps organizations forecast and control spend more reliably.
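Surfacing anomalies can start with something as simple as comparing each day's spend to a recent baseline. The sketch below flags days whose cost exceeds a multiple of the trailing mean; the function name, the 7-day window, and the 1.5x ratio are arbitrary assumptions for illustration, not settings taken from the toolkit.

```python
from statistics import mean


def flag_spend_spikes(daily_costs, window=7, ratio=1.5):
    """Return indices of days whose cost exceeds `ratio` x the trailing mean.

    daily_costs: per-day cost totals in chronological order.
    Days without a full trailing window are never flagged.
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    flagged = []
    for i in range(window, len(daily_costs)):
        baseline = mean(daily_costs[i - window:i])
        # Skip zero baselines so the first day of any new workload
        # is not automatically reported as a spike.
        if baseline > 0 and daily_costs[i] > ratio * baseline:
            flagged.append(i)
    return flagged
```

A plain trailing mean is easy to explain to finance stakeholders, but one large spike inflates the baseline for the following days and can mask a second spike; a trailing median is a common, more robust alternative.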

Technical Summary

- Data Source: Databricks usage logs (via billing system tables such as system.billing.usage, or the REST API)

- Storage: Delta tables for structured cost history

- Visuals: Dashboards in Databricks SQL or Power BI

- Optional: Alerts for idle compute or spikes, auto-tagging for cost attribution

- Assets: SQL models, ingestion notebook, dashboard templates
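Tag-based attribution only works as well as tagging coverage, because usage from untagged compute has no owner to charge. The sketch below rolls estimated cost up by one custom tag and reports each group's share for a showback view, assuming input rows of (custom_tags, cost) pairs such as might be derived from the custom_tags column of billing usage records. The function name, the "team" tag key, and the "UNTAGGED" label are hypothetical choices for illustration.

```python
from collections import defaultdict


def showback_by_tag(rows, tag_key="team", untagged="UNTAGGED"):
    """Roll up cost by one custom tag and report each group's share of total.

    rows: iterable of (custom_tags, cost) pairs; custom_tags may be None.
    Returns {group: (cost, percent_of_total)}, rounded for display.
    """
    totals = defaultdict(float)
    for tags, cost in rows:
        # Missing tags land in an explicit bucket so gaps in tagging
        # coverage stay visible instead of silently disappearing.
        totals[(tags or {}).get(tag_key, untagged)] += cost
    grand_total = sum(totals.values())
    report = {}
    for group, cost in sorted(totals.items()):
        share = cost / grand_total if grand_total else 0.0
        report[group] = (round(cost, 2), round(100 * share, 1))
    return report
```

Tracking the size of the UNTAGGED bucket over time gives a simple measure of whether any auto-tagging policy is actually closing attribution gaps.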